The Impact of Focus and Context Visualization Techniques on Depth Perception in Optical See-Through Head-Mounted Displays

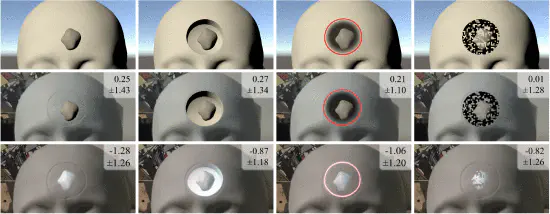

Base implementations of focus and context visualization techniques (top row) and their appearance in video- (mid row), and optical- (bottom row) see-through head-mounted displays. From left to right: Baseline overlay without contextual layer, Virtual Window, Contextual Anatomical Mimesis, and Virtual Mask. Mean and standard deviation of corresponding alignment errors of study 1 are presented in centimeters. The OST images are captured using a smartphone camera placed at the eye position. Contrast and brightness have been adjusted for a faithful impression of the overlay as observed by the user.

Base implementations of focus and context visualization techniques (top row) and their appearance in video- (mid row), and optical- (bottom row) see-through head-mounted displays. From left to right: Baseline overlay without contextual layer, Virtual Window, Contextual Anatomical Mimesis, and Virtual Mask. Mean and standard deviation of corresponding alignment errors of study 1 are presented in centimeters. The OST images are captured using a smartphone camera placed at the eye position. Contrast and brightness have been adjusted for a faithful impression of the overlay as observed by the user.Abstract

Estimating the depth of virtual content has proven to be a challenging task in Augmented Reality (AR) applications. Existing studies have shown that the visual system makes use of multiple depth cues to infer the distance of objects, occlusion being one of the most important ones. The ability to generate appropriate occlusions becomes particularly important for AR applications that require the visualization of augmented objects placed below a real surface. Examples of these applications are medical scenarios in which the visualization of anatomical information needs to be observed within the patient’s body. In this regard, existing works have proposed several focus and context (F+C) approaches to aid users in visualizing this content using Video See-Through (VST) Head-Mounted Displays (HMDs). However, the implementation of these approaches in Optical See-Through (OST) HMDs remains an open question due to the additive characteristics of the display technology. In this article, we, for the first time, design and conduct a user study that compares depth estimation between VST and OST HMDs using existing in-situ visualization methods. Our results show that these visualizations cannot be directly transferred to OST displays without increasing error in depth perception tasks. To tackle this gap, we perform a structured decomposition of the visual properties of AR F+C methods to find best-performing combinations. We propose the use of chromatic shadows and hatching approaches transferred from computer graphics. In a second study, we perform a factorized analysis of these combinations, showing that varying the shading type and using colored shadows can lead to better depth estimation when using OST HMDs.